Preamble

Lincoln’s a proper drive from my home base in Little Rock. Broken into 3 legs. First to Rogers where Megan lives. Second to KC, where there’s Heather and another sister, Kyle, on a special guest appearance. Then on to Lincoln. Each segment takes about 3 hours. Overnights at any of them. For sure meal stops.

No family in Lincoln. That’s where my Gravel Family resides. Been coming here every August since 2018. It feels like a second home. All of the necessary attributes. Lots of friends. With its good times, and bad. Triumph and failure. A place to celebrate. A place to battle demons.

Pregame

Shakeout ride was on Thursday around lunchtime. Left from the Sandhills Event Center for a little out-and-back. The first and last 12 & 1/2 miles of the course. Felt pretty good. Hung out at the expo afterwards. Checked in. Visited with some old friends and made some new ones. Like how it was to be a little kid on a bike. Hey do you ride? Me too. Let’s be friends. Simple as that.

Lots of vendors bringing with them new ideas. Get to rub elbows with some pretty amazing people. Hearing their stories. Gathering the encouragement and nerve that would be needed to complete the challenge that awaits.

Later, a nice dinner with Carmen and John, at Lazlo’s in the Haymarket. It has been awesome watching them progress from novices to experts over the past couple of years, since we first met here. Seeing their fitness gains. This year Carmen’s doing the 75. John had to step down to the 50K, but sees it as an opportunity to PR.

Lastly, visited with Kelly and Michelle, back at the event center where they were camping. We had the same conversation about who was the least prepared. You can’t trust Michelle’s assessment. She always says that she didn’t train and crushes it anyway. Same with Kelly, who’s in the Double. A 50K run Friday morning followed by 150 miles on the bike Saturday. Who does that?

Prep

Then the little shifter issue. Called Richard who runs my local bike shop. He told me to call if I get into a problem at a race. Like the first Long Voyage (2021) and a bent derailleur. Lost the middle gears. Affected the outcome. Not going there again. Richard got me going. It was stressful and in a weird way, beneficial. Burned off the last of the pre-race jitters with a blow torch of anxiety. Let’s call it a test.

Start

The 17:00 start Friday was delayed. Not quite fifteen minutes. A front had passed over dropping temps by at least 10 degrees. How lucky can we get? Carmen and John were there which helped calm the nerves.

Found Andy at the starting line. He’d been called up for being a possible five-time finisher. The only one who has ever been eligible, as the race is only five years old and there are only so many crazy people that get past their first or second. This would be our third time teaming up.

And we’re off. Don’t know how many, maybe 50 or 75. Not a lot. Sent under a hail of enthusiasm and positive energy. It felt good. Any remaining anxiety gets burned away. Knew we’d better soak up these good vibes. Gonna need it.

It did rain a bit with some wind. Not much lightning. I stopped once about an hour in to put on a poncho. Couldn’t quite get it over my swollen Camelbak. Screw it. We don’t need no stinking ponchos. Then it stopped. Some blustery wind gusts kicked up for a while. Made things interesting. Added to the excitement of the moment. I harbored some concern over what was gonna happen down the road, if/when the MMRs get soaked. That’s just one of many and nothing to be done, so forget about it.

The evening grew dark and all’s well. We’re making decent progress. All systems functioning nominally. Time to get dialed in for the long roll through the night. Make the necessary adjustments. Met a couple of unlucky riders in Plattsmouth who had to bail out. One had vertigo, another with a nasty sliced tire. Both seemed OK. Nothing to be done other than kind words.

Big Muddy on a toll bridge requires a quarter to cross. Carmen gave me one at the starting line. This is about when it gets fun. As in Type I. Now, we’re going places. Across the wide expanse of the valley into the lush rolling fields of Iowa all under the cover of darkness.

We’d traversed many of these roads a couple years earlier. They were familiar and even comfortable. Like an old friend who can be cantankerous. We knew where the difficulties were and didn’t have to think much about them.

We’ve talked some before about how the early morning hours can get spooky. Strange lights and sounds. Overnight crossings of interchanges are never going to be fun. A couple long stretches on pavement. Makes sense. We’re crossing a major river valley and an Interstate. One of the longest climbs into a small town called Glenwood. There were folks passing in cars, slowing and staring at us as if we were aliens. Maybe we had been abducted or infected by them. Then back into the climby bits. Progress was still good, not great. Everything well within tolerances.

Passed thru Treynor’s fire station somewhere in the middle of the night. They opened their doors and let our filthy selves in. Didn’t catch the name of who was pulling cleanup detail and watching the store. Looked to be pretty high up the food chain. Maybe the captain? An unmistakable boost to our morale to have them here. For communities to welcome us. Makes us feel validated and safe. A humbling experience. Jason Strohbehn’s a favored son in these parts and it shows by how we’re treated.

Moving out of there and back into the grind of the Iowa countryside. Up, down, up, down and up and down and up and down. On and on it goes. Never stopping. The climbs are punchy enough to require effort. On the other side you better make damn sure a proper line’s being kept. We mostly bombed the downhills. The climbs and chunk were slowing us down. We weren’t making good time.

As the hours ticked off it all starts to gradually wear you down. The constant stream of effort and focus means both the body and mind are burning energy. Straining eyes scan the foreground for hazards in the most minute of detail. Eyeglasses fog over from being at dew point. Eventually, just stowed them. Do you want proper eye protection or clarity of vision? What’s worse being in the ditch or having something stuck in your eye? The bugs weren’t bad. With just me and Andy, not a lot of rocks getting kicked up.

Do you ever get that song stuck in your head? Andy asked. Yeah, but not right now. Could’ve been a Stones song. Can’t Get No Satisfaction. Or maybe AC/DC Thunderstruck. Music, especially Classic Rock, is a safe topic out here. Nostalgia can drown the pain.

What do you think about? I’d been riding quiet for some time. I’ll tell you what doesn’t get thought about. That we have 200 miles left to ride. What’s happening right this second gets priority. There are countless issues to contemplate. They seem trivial in a normal context but rise in importance when in the moment. Starting with navigation. I already mentioned vision. Related to that is lighting. How it all gets maintained absorbs all of my attention. Turn the light intensity up on the downhills, back down again once at the bottom. To preserve power. If I do it right, there’ll be plenty for tomorrow night. If I make it that far.

On top of hydration? Filthy bottles caked with who knows what, from the roads. How about some food? Oh shit, watch out. F, almost went down there. Let me get my heartrate back down. How much further to the next stop. Is the drivetrain starting to play a tune? There’s strategy and tactics. When can I stop vs. How can I not stop.

The GPS head unit displays the map, heartrate and power metrics. Bike computer has speed and average mph. Rarely do I look at distance traveled. Only when trying to plan a stop. Speaking of GPS, it consumes power too. I already switched on the aux battery about an hour after sunset. That keeps the backlight on. Great for navigating. No missed turns or surprises. More than a feature at night.

Again, not really watching time or distance. What difference does it make? It’s not like there’s another gear or power source for legs. They’re doing what they can do. Leave them alone. Let them do their thing.

Just before the nature trail segment we caught up with Brian. He’d been out ahead of us for an hour or two. Could see the blinky lights appear and disappear in rhythm with the hills.

The three of us rode together for the next hour. It was nice being able to relax, chat and just crank the wheels over that smooth, flat and well maintained trail. Gave the mind a much needed rest from the strain of the downhills.

Once we hit the other side, it was time for another stop, in Shenandoah. Jamie Tracy had his van by the Casey’s. This was now the third year I’d seen him out there. His wife Christie had passed through hours before. She eventually was the first woman finisher. I had drop bags there. A change of socks, gloves, head gear and lights. Grabbed the powder for the 2nd half, refilled water tanks, and off again. No time to hang out, even though it would have been nice.

Not long after was the sunrise. That’s always going to bring a boost. This one was foggy and overcast. A kind of gloomy mood seemed to settle in. Underscored the task that lay ahead.

Riding through the windmill farms was routine. We’d been warned numerous times about the heavy chunk. It was anticlimactic. Didn’t even have to slow down. Well, maybe once. Just more prime Grade A chunk. By then we’d grown fat on a steady diet.

Hamburg, MO. A Great Stop. Maybe the best of the day. Super nice little river town. I was happy to be out of Iowa after 100 miles. We clipped the top corner of Missouri. Enough to say we’d been there. Passed another rider before reaching town. More pavement, and now that the sun burned off the cloud cover, it’s starting to look like a beautiful day. The townsfolk were nice considering how awful we looked. They in their Saturday finery and us being not so fresh. Friendly chatter and even some cheers. As if we weren’t Bushwhackers riding out from the hills after another night raid.

Beginning to show signs of wear, but you got to keep a happy face. Not that have a nice day fake bullshit happy. What else was there to do? No use complaining. We all knew another 125 miles of Shane’s Little Shop of Horrors awaited us to the finish line.

Rolling out of Hamburg meant crossing the US Highway 2 bridge over the Missouri River basin. Not a fun crossing. A long stretch of paved shoulder with no safe egress. This means cars and trucks whizzing past with no escape. We have to trust them. That they’re not out of their minds or distracted by phones. Most got over. Some didn’t. One laid on the horn. Obviously confused. How dare we ride there. Wait a minute. This is not an Interstate. Race or no race, we have every right to the shoulder. Find something to like about it.

In any case this is how I roll. Busy interchanges are the only way into / out of most towns, bicycle infra being what it is. More hazards than you can shake a stick at. Not recommended. Do something often enough and it becomes normal. Probably not OK for you. Fine for me.

Next, we’re back on gravel where we rule. Had a long way along the river headed north into the wind. That’s when Andy and I started working together. We’re not exactly breaking speed records, but not losing more ground. Then we helped a rider get his wheel plugged. We’re on the bubble and losing time again. So what. Form Over Function. Not even a hard question. Yes, stop and help the rider.

Afterwards, more work headed north and west as we made our way on a meandering path back to Lincoln. Climbing out of the river basin, more hills and a rising tide of wind from the north.

Upon entering the course with the 150 milers there was a checkpoint at the Arbor Day Trail. A winding, chunky little patch through the park that I could have done without. That’s OK. It’s not always about me. Compounding our woes, we almost missed the water stop. The 150 riders had long since passed. Their timing strip was being taken down. We stopped and talked to the driver who pointed us back to water.

They waited on us. About half a dozen. Angels lent from a town nearby. I can’t remember their names. They didn’t know exactly who we were either. It didn’t matter. Is there ICE? Yes, we have a cooler over there. A water hose was produced along with some snacks that I had no need for. The water and ice were enough. Along with the kindness they showed helping us get our act back together.

About when we started to notice the heat. Nothing bad actually. Just enough to know it gets to play its part. Did I mention hills? No matter, just put that on repeat. Then the MMRs kept us sharp. I did not mind them. Enjoy is a better word.

Trucks and volunteers started to pass by. Need anything? I could use a bottle of cold water. Tear open an LMNT package and pour it in. Shake and down it like some kind of weird gravel junkie. Get that salt fix. Could have been refreshing? Take that, sun. We win for now.

Speaking of the mind, it gets hard to ignore its symphony of pain. Which hurts the most, rings the chorus. Or, the question everyone asks, how do you sit that long on a bicycle seat? Well, yeah, exactly. There are workarounds. A good fit, saddle, bibs, chamois and cream of course. Don’t make me tell you my Chamois Butt’r allergy story. I’ll tell you. Just keep asking about butt pain and you’re gonna hear about it. I promise that you won’t like it.

A tolerance develops. There are limits. They can be pushed aside with more training. I didn’t train as much as I would have liked. When you punch it all in the answer becomes maybe not what you want.

To think about it is to grant it power. Push it back. Besides, the neck hurts even more? A riding position for 24 hours isn’t real comfy. We don’t have neck pillows that work on gravel bikes.

What about us, the legs beg. You’re not cramping, what do you have to complain about. Been doing hack squats for about a day. Quit your whining. The back ain’t exactly celebrating and the hands are screaming bloody murder. Palms getting cut up. Oh well. What about us poor arms. Don’t we get some love? Shut the f up. You got aerobars. What more do you want. The tummy’s notably absent from this sad song. It really wants to help. But its language of love is cheeseburgers and corndogs and they’re not yet being harvested from the fields.

That’s not Andy getting out further ahead. We’re just letting him think so because it’s good for his ego. Him on one gear speeding away from me on 12.

Probably should’ve broke out the ear buds. The symphony of pain was growing louder.

And still we slow. Everything’s dropping. Power, speed, heartrate. Another 20 miles to the next stop. I need food to replenish. Have another bread ball. It’s Wonderful. Why can’t I swallow it? Spit it out. Can I interest you in another gel pack? Naw, just a salt stick for me, thanks.

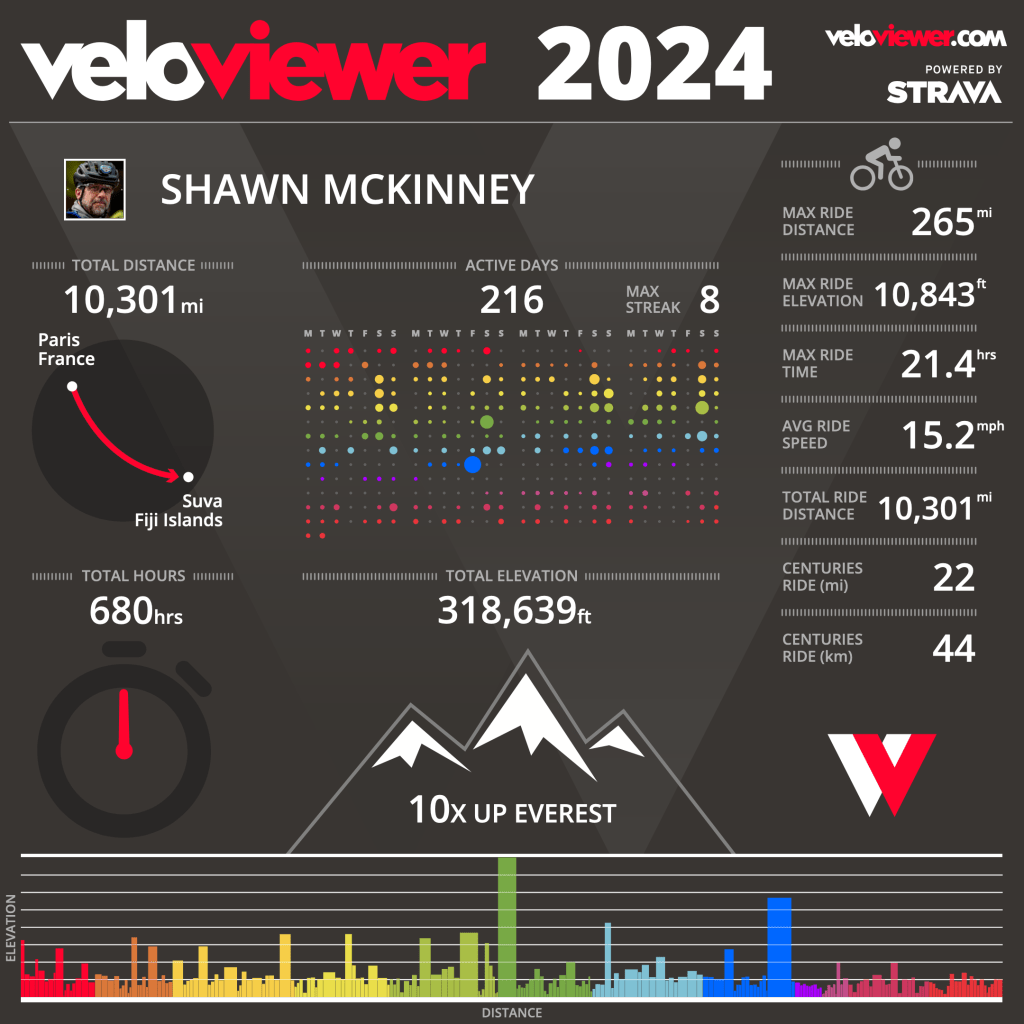

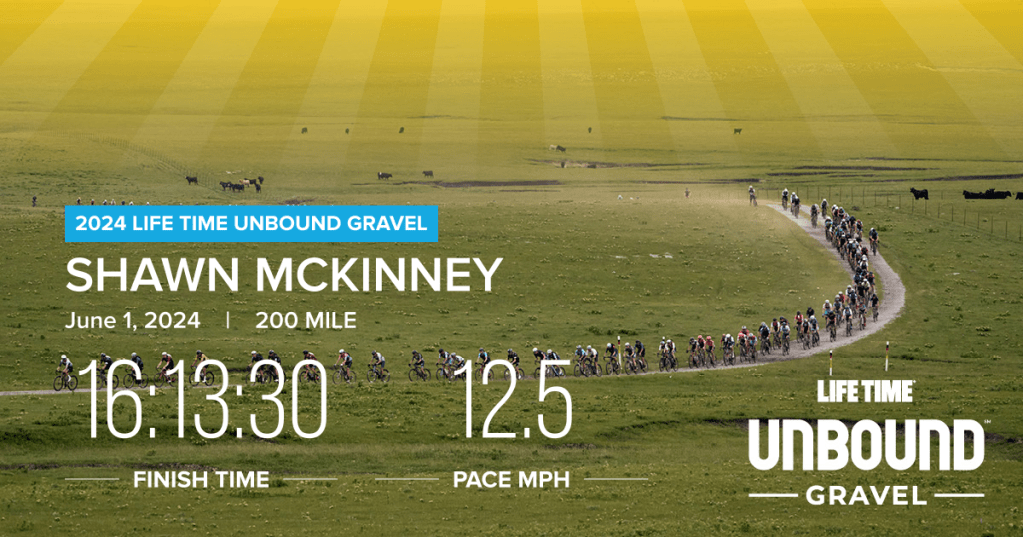

What the hell am I complaining about? We’re on House Money. What with the summer training and health drought. A month earlier couldn’t even say I’d make the trip let alone line up for the 300. Most likely would’ve dropped down to the 150. Like back in ’22. Decided to go for it. With the second century now behind, it’s all good.

Gratitude can be a salve. Sure, the wind, rocks, legs, neck were under constant review. A perfect backdrop for not finishing, with zero regrets. Failing the greater challenge is better than just another finish. These gains must be appreciated and put into perspective.

That spot up on a hill. So green and lush. It offers trees and shade. Looks so nice and peaceful up there. The wind feels like a gentle autumn breeze. Maybe I could stop for a moment. Might find a cookie if I dig around enough. Could answer some of those texts of encouragement that have been coming in.

Who are you calling? Have you found what we’re looking for? Does it matter if the finish line was crossed? Or are the experience and lessons enough? A finish is good but it’s not why we’re here. There’s more. Got to get a hamburger and think about it.

That’s when the Yukon arrived. Didn’t want to mess it up. Was told not to worry about it. They’re ferrying riders from western Iowa / eastern Nebraska and back all day and night. Won’t accept anything extra as payment. 3 feet Cycling. Not the last time our paths will cross.

After

Carmen and John were back at my truck. That’s when I learned about Michelle’s concussion. It’s a long story and not really mine to tell. The short version is she’s OK. John PR’ed the 50K. Carmen didn’t get to finish her 75. She found Michelle unconscious and stayed with her until the ambulance came. Later she made sure Michelle’s bike made it back to Lincoln. She gave up a finish and helped a friend who needed it. I wouldn’t have expected any less from her. That’s how this Gravel Family rolls.

I did get to hang out with Michelle at the finish line. We had that hamburger and waited for Kelly to cross. A muted celebration. I picked up my four pack of Climbing Kites and headed back to the hotel room.

Day After

Andy finished of course. Eleven minutes before the cutoff. I wasn’t there. He got that award for 1500 miles of hell. He says it’s his last. He’s done with single day events and wants to focus on things like the Great Plains Gravel Ride. We had breakfast with his wife Kristy and I had a nice time hearing how they met on a bike ride. If you want to talk to Andy, you best get on two wheels.

Afterwards it was back to KC for another meetup with my sisters: Kyle, in from Seattle, and Heather, who lives in Waldo. On to Rogers for another night hanging with Megan and Cameron. We topped it all off with an ice cream run.

A more perfect ride could not have been asked for. Was it the outcome I wanted? It’ll do.